|

This feature is designed to accompany the publication of a new article on scoring speaking tests: This feature is designed to accompany the publication of a new article on scoring speaking tests:

Fulcher, G., Davidson, F., and Kemp, J. (2011). Effective rating scale development for speaking tests: Performance decision trees. Language Testing 28(1), 5 - 29.

On this page you will find audio files of some of the transcripts in the article so that you can analyse the samples for yourself. Firstly, we summarize the argument in the paper, before presenting this new approach to scoring performance tests.

Introduction

Speaking tests are normally scored using a rating scale, which is typically a hierarchically ordered set of levels or bands. Each level has a prose descriptor that purports to describe performances typical of the level, and has a score value. Test takers undertake tasks, and their speaking performance is rated against the prose descriptors.

There are essentially two approaches to the development of rating scales have been constructed. The first is the measurement-driven approach, which can further be broken down into two methods: the a-priori or "armchair" method (first named as such in Fulcher (1993)), and the scaling method (eg. the CEFR). The second is the performance data-based approach, which can be broken down into methods that use analysis of actual speech (Fulcher, 1987; 1996), and methods that use human judgments of the quality of language production (Upshur and Turner, 1995). These are described in the article, and in some detail in Fulcher (2010: 208 - 215).

We contend that measurement-driven approaches generate impoverished descriptions of communication, while performance data-driven approaches have the potential to provide richer descriptions that offer sounder inferences from score meaning to performance in specified domains. This is because measurement-driven approaches select descriptors for scales based upon their measurement properties, and largely ignore the question of whether they closely reflect the way in which humans actually communicate.

To demonstrate this we use the example of service encounters. These are defined as any interaction between a service provider and a customer, in which a temporary relationship is established in order to achieve a particular goal. Gabbot and Hogg (2000: 385) refer to this as a "servicescape", in which there are actors, scripts, settings, and the expectation of a happy ending. If you don't believe this, consider comedy! We laugh at this clip from Mr Bean because he violates the expectations of what we do in restaurants. If the waiter were to violate his script in the service encounter, it would be considered exceptionally rude. Indeed, some researchers like Ryoo (2005) have shown that a failure to follow the script of a service encounter can lead to pragmatic and intercultural miscommunication, which in turn can result in customer dissatisfaction and even hostility. Not only are there roles that must be played in this 'dramaturgy', but the power relations in these roles are always unequal, placing the customer in a position of power, and able to control and guide the development of the discourse.

PDTs. In this new article, we demonstrate how the analysis of performance data from travel agency service encounters can be used to construct a new scoring instrument that we call a Performance Decision Tree (PDT). This approach to scoring avoids reified, linear, hierarchical models that purport to describe typical performances at arbitrary levels. Instead, PDTs offer a boundary choice approach on a range of observational discourse and pragmatic variables. The resulting score is more meaningful because its origin lies in performance data, and it can therefore provide rich diagnostic information for learning and teaching. PDTs. In this new article, we demonstrate how the analysis of performance data from travel agency service encounters can be used to construct a new scoring instrument that we call a Performance Decision Tree (PDT). This approach to scoring avoids reified, linear, hierarchical models that purport to describe typical performances at arbitrary levels. Instead, PDTs offer a boundary choice approach on a range of observational discourse and pragmatic variables. The resulting score is more meaningful because its origin lies in performance data, and it can therefore provide rich diagnostic information for learning and teaching.

Data and Description

From an analysis of original data from travel agent service encounters and the literature, we derive the following critical elements involved in succesful interaction, each of which is described in the article. They are listed here, and below we illustrate three with sample data:

A. Discourse Competence

- Realization of service encounter discourse structure (the 'script': Hasan, 1985)

- The use of relational side-sequencing

B. Competence in Discourse Management

- Use of transition boundary markers

- Explicit expressions of purpose

- Identification of participant roles

- Management of closings

- Use of backchannelling

C. Pragmatic Competence

- Interactivity/rapport building

- Affective factors, rituality

- Non-verbal communication

Examples

A2: Use of Relational Side-Sequencing

This is endemic in service encounter discourse. Market researchers refer to this as the 'personalization' of the encounter (Suprenant and Solomon, 1987), and in the language literature it is termed 'relational talk' (Ylanne-McEwen, 2004). It's purpose is to establish rapport (Gremler and Gwinner, 2000) - the temporary personal bond that enables successful service interactions. What happens in relational side-sequencing is that the participants 'step outside' their role as service provider or seeker, and 'realign' themselves as fellow travellers. Listen to this extract from our data in which the travel agent ceases to play the role of travel agent in order to establish a temporary personal relationship when she asks the customer "Have you been to Barcelona before?" (Transcript of this is in the article).

B4: Management of Closings

Managing the end of a service encounter is a complex business (Coupland, 1983), in which proficient speakers explicitly indicate that the interaction is coming to an end. A closing is usually constructed of a pre-closing move such as an evaluation or a proverb or aphorism, followed by identifying the next activity, a reformulation of the conclusion or purpose of the visit, and then the closing itself. Listen to the following extract from our data and read the transcript while listening. Try to identify these features of the closing sequence (notice the aphorism in exchange 16 to help; our full analysis of this is in the article).

Transcript of the Closing

C1: Interactivity/Rapport Building

Usually occuring towards the end of an interaction, prior to the closing, service encounters frequently contain non-transactional rapport-building language designed to indicate that the temporary relationship established is harmonious, the service provider shows that they have taken a personal interest in the customer, and to establish 'similarity'. Listen to this final extract from our data, in which the participants establish their similarity: they share the same gender and interest in shopping, and generate humour through a sterotype. Again, the full transcript is in the article.

The Performance Decision Tree

Through the analysis of each of the categories listed above, and from which I have provided three examples, it is possible to create a Performance Decision Tree from which to score simulated service encounters. This tool would generate a score on a scale, rather then as a "band" or "level". The score is related to the superordinate constructs of discourse and pragmatic competence, and the full explanation of the relationship between constructs and observable variables is provided in Fulcher et al. (2011).

Conclusion

The Performance Decision Tree relates observable variables to models of communicative competence through the analysis of performance data. The meaning of the score can therefore be traced back to actual performance, as well as ability on the constructs of interest. This strengthens the inferences which we draw from score meaning.

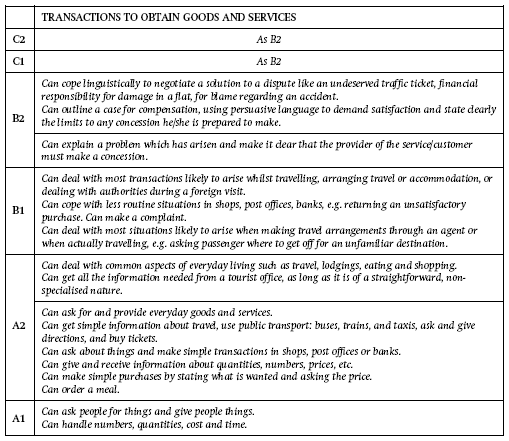

This should be compared with more traditional measurement-driven scales like the following from the Common European Framework (CEFR) (Council of Europe, 2001: 80). Problems we face with scales of this nature include the fact that some descriptors mention specific situations while others do not, contexts of interaction change from one descriptor to another, it appears that 'dealing with travel agents' is an all or nothing ability that suddenly appears at level B1, and that acting as a service provider as well as a service seeker is acquired at level A2. There are many other problems, which you may wish to identify for yourself, or read the full analysis in Fulcher et al. (2011). What is clear is that the illustrative scales from the CEFR are very far from describing either the constructs or the observable variables that make human service encounter interaction so rich.

|

The failure of measurement-driven scales like the CEFR to capture this richness, and their presentation of what we term 'impoverished descriptions' of language, is a direct result of their method of construction, which is described in Fulcher (2003: 107 - 113). The descriptors are there because they could be scaled using teacher perceptions of their difficulty using Rasch. Unlike Performance Decision Trees, these measurement-driven scales are theory-free, and bear little relation to real-world communication. Their growing use in teaching and curriculum design is therefore highly problematic.

Finally, here's another example of how violating a service encounter script results in humour. There is no attempt to establish rapport in this sketch. Our amusement comes entirely from knowing what the rules of interaction are, and recognizing that they are being blatently flouted.

Activity

Try recording a number of short interactions when you visit shops. Describe the discourse structure of the interactions and their components. [Note that recording without permission is ethically questionable, and in many countries is also illegal. If you try this activity ensure that you seek permission before recording, and inform anyone who is not aware of the presence of the recorder after the event so that they have the option to ask you to delete the material.] When you have established a typical script for the interaction and the nature of the language used, try manipulating the language or elements of the interaction for comic effect. What does this tell us about the nature of service encounter discourse?

References

Council of Europe (2001). Common European Framework of Reference for Languages: Learning, teaching and assessment. Cambridge: Cambridge University Press.

Coupland, N. (1983). Patterns of encounter management: Further arguments for discourse variables. Language in Society, 12, 459 - 476.

Fulcher, G. (1987). Tests of Oral Performance: the need for data-based criteria. English Language Teaching Journal 41, 4, 287 - 291.

Fulcher, G. (1993). Construction and Validation of Rating Scales for Oral Tests in English as a Foreign Language. Unpublished PhD thesis: Lancaster University, UK.

Fulcher, G. (1996). Does thick description lead to smart tests? A data-based approach to rating scale construction. Language Testing 13(2), 208 - 238.

Fulcher, G. (2003). Testing Second Language Speaking. London: Longman/Pearson.

Fulcher, G. (2010). Practical Language Testing. London: Hodder Education.

Fulcher, G., Davidson, F., and Kemp, J. (2011). Effective rating scale development for speaking tests: Performance decision trees. Language Testing 28(1), 5 - 29

Gabbott M, Hogg G (2000). An empirical investigation of the impact of non-verbal communication on service evaluation. European Journal of Marketing, 34(3/4), 384-398.

Gremler, D. D. and Gwinner K. P. (2000). Customer-employee rapport in service relationships. Journal of Service Research, 3(1), 82 - 104.

Hasan, R. (1985). The structure of a text. In Halliday, MAK & Hasan, R. (1985). Language, context, and text: Aspects of language in a social-semiotic perspective. Victoria, Australia: Deakin University Press, 52 - 69.

Ryoo H-K (2005). Achieving friendly interactions: a study of service encounters between Korean shopkeepers and African-American customers. Discourse and Society, 16(1), 79 - 105.

Suprenant, C. F. and Solomon, M. R. (1987). Predictability and personalization in the service encounter. Journal of Marketing, 51(2), 86 - 96.

Upshur, J. and Turner, C. (1995). Constructing rating scales for second language tests. English Language Teaching Journal, 49(3), 3 - 12.

Ylanne-McEwen, V. (2004). Shifting alignment and negotiating sociality in travel agency discourse. Discourse Studies, 6(4), 517 - 536.

Glenn Fulcher

March 2011

|